Does AI Help or Hurt Human Radiologists’ Performance? It Depends on the Doctor

New research shows radiologists and AI don’t always work well together

At a glance:

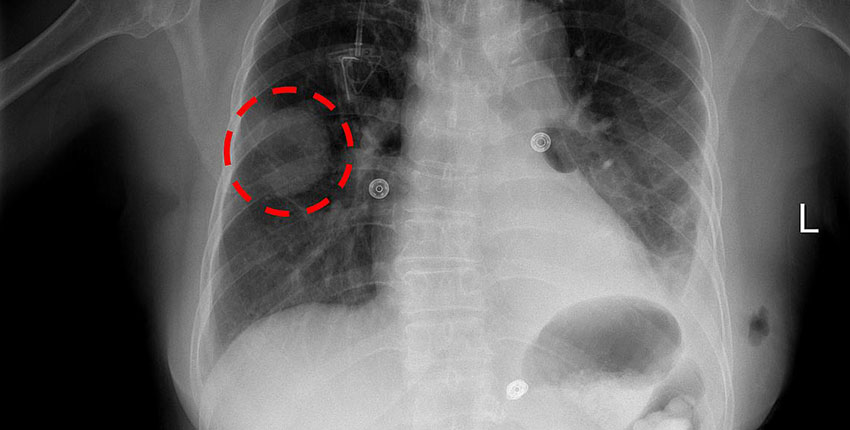

One of the most touted promises of medical artificial intelligence tools is their ability to augment human clinicians’ performance by helping them interpret images such as X-rays and CT scans with greater precision to make more accurate diagnoses.

But the benefits of using AI tools for image interpretation appear to vary from clinician to clinician, according to new research led by investigators at Harvard Medical School, working with colleagues at MIT and Stanford.

The study findings suggest that individual clinician differences shape the interaction between human and machine in critical ways that researchers do not yet fully understand. The analysis, published March 19 in Nature Medicine, is based on data from an earlier working paper by the same research group released by the National Bureau of Economic Research.

In some instances, the research showed, use of AI can interfere with a radiologist’s performance and reduce the accuracy of their interpretation.

“We find that different radiologists, indeed, react differently to AI assistance — some are helped while others are hurt by it,” said co-senior author Pranav Rajpurkar, assistant professor of biomedical informatics in the Blavatnik Institute at HMS.

“What this means is that we should not look at radiologists as a uniform population and consider just the ‘average’ effect of AI on their performance,” he said. “To maximize benefits and minimize harm, we need to personalize assistive AI systems.”

© 2025 by the President and Fellows of Harvard College