How Good Is That AI-Penned Radiology Report?

Scientists design new way to score accuracy of AI-generated radiology reports

AI tools that quickly and accurately create detailed narrative reports of a patient’s CT scan or X-ray can greatly ease the workload of busy radiologists.

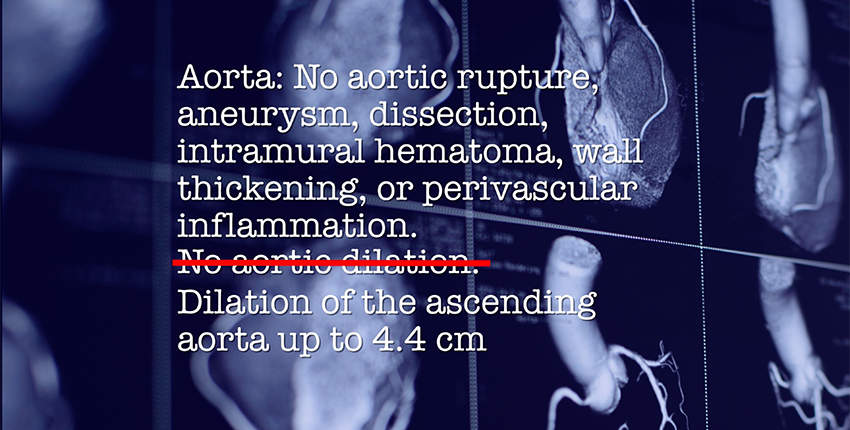

Instead of merely identifying the presence or absence of abnormalities on an image, these AI reports convey complex diagnostic information, detailed descriptions, nuanced findings, and appropriate degrees of uncertainty. In short, they mirror how human radiologists describe what they see on a scan.

Several AI models capable of generating detailed narrative reports have begun to emerge. With them have come automated scoring systems that periodically assess these tools to help inform their development and improve their performance.

So how well do the current systems gauge an AI model’s radiology performance?

The answer is good but not great, according to a new study by researchers at Harvard Medical School published Aug. 3 in the journal Patterns.

Reliable scoring systems are critical if AI tools are to keep improving and if clinicians are to trust them, the researchers said. Yet the metrics tested in the study failed to reliably identify clinical errors in the AI reports, some of them significant. The finding, they said, highlights an urgent need for improvement and for high-fidelity scoring systems that faithfully and accurately monitor tool performance.

The team applied various automated scoring metrics to AI-generated narrative reports. The researchers also asked six radiologists to read the same reports.

The analysis showed that the automated scoring systems were worse than the human radiologists at evaluating the AI-generated reports. The metrics misinterpreted and, in some cases, overlooked clinical errors made by the AI tool.

“Accurately evaluating AI systems is the critical first step toward generating radiology reports that are clinically useful and trustworthy,” said study senior author Pranav Rajpurkar, assistant professor of biomedical informatics in the Blavatnik Institute at HMS.

© 2025 by the President and Fellows of Harvard College